CSOPESY_04_processes_and_threading_wip

This note has not been edited yet. Content may be subject to change.

this is a combination of 04 - Processes and whatever slides sir used during class, as of writing (10/08/2025) sir hasn't uploaded these slides

Processes

- a process is a program in execution

- a program is a passive entity (like a file on a disk), while a process is an active entity

- a single program (ex. web browser) can generate multiple processes to handle different tasks like separate tabs, each with its own state and resources

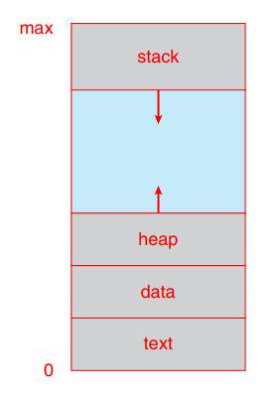

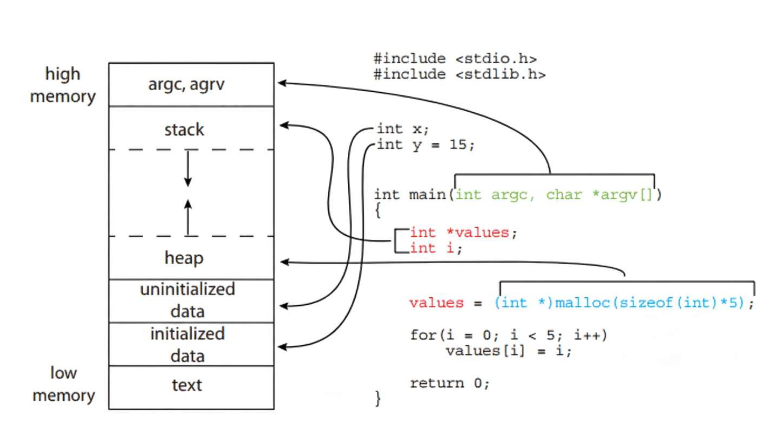

Process Components

a process is composed of several key sections:

- text section: contains program code

- data section: holds global variables

- heap: used for dynamically allocated memory

- stack: temporary data storage area for function parameters, return addresses, and local vars

Memory layout of a C program

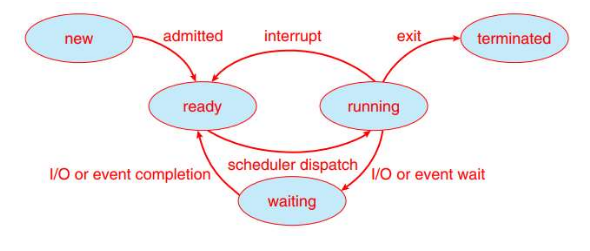

Process State

- as a process executes, its state changes, which is defined by its current activity

States:

- New: process is being created

- Running: instructions are being executed by the CPU

- Waiting: process is waiting for some event to occur (e.g. I/O completion or a signal)

- Ready: process is waiting to be assigned to a processor

- Terminated: process has finished execution

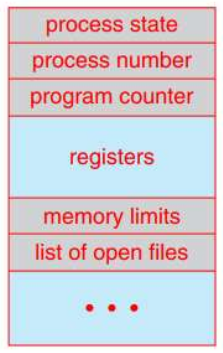
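A minimal sketch of the state changes, assuming the usual five-state diagram (new, ready, running, waiting, terminated). The `State` enum, the `TRANSITIONS` table, and the `move` helper are names made up for illustration, not part of any OS API.

```python
from enum import Enum


class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"


# Legal transitions and the event that causes each one.
TRANSITIONS = {
    (State.NEW, State.READY): "admitted",
    (State.READY, State.RUNNING): "scheduler dispatch",
    (State.RUNNING, State.READY): "interrupt",
    (State.RUNNING, State.WAITING): "I/O or event wait",
    (State.WAITING, State.READY): "I/O or event completion",
    (State.RUNNING, State.TERMINATED): "exit",
}


def move(current, target):
    """Return target if the transition is legal, otherwise raise ValueError."""
    if (current, target) not in TRANSITIONS:
        raise ValueError(f"illegal transition: {current.value} -> {target.value}")
    return target
```

Note that a waiting process cannot go straight to running: once its event completes it goes back to ready and has to be dispatched again.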

Process Control Block (PCB)

- also known as a task control block, is a data structure in the operating system kernel that contains all the information needed to manage a specific process

- it's the central repository for a process's state and is essential for the OS to perform a context switch

- GPT: A context switch is the process by which an OS saves the state of the currently running process and loads the state of another process so that it can be executed; it occurs when the CPU switches from running one process/thread to another.

- GPT: steps of a context switch:

- save current process's CPU state into PCB

- choose next process via CPU scheduler

- load chosen process's CPU state from PCB

- update CPU to continue execution from where that process left off

- holds information about a process including:

- Process State: current state of the process (New/Running/Waiting/Ready/Terminated)

- Program Counter (PC): contains the address of the next instruction to be executed

- CPU registers: the values of all CPU registers, which must be saved and restored during a context switch

- Memory-management Information: pointers to the page tables or segment tables for the process

- Accounting Information: CPU time used, time limits, and job numbers

- I/O Status Information: a list of I/O devices allocated to the process and a list of open files
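A toy model of the PCB and the context-switch steps listed above. This is only a sketch: a real PCB is a kernel structure with many more fields, and the `PCB`, `CPU`, and `context_switch` names here are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PCB:
    pid: int
    state: str = "ready"
    program_counter: int = 0
    registers: dict = field(default_factory=dict)


@dataclass
class CPU:
    program_counter: int = 0
    registers: dict = field(default_factory=dict)


def context_switch(cpu, old, new):
    # 1. save the current process's CPU state into its PCB
    old.program_counter = cpu.program_counter
    old.registers = dict(cpu.registers)
    old.state = "ready"
    # 2. the CPU scheduler has already chosen `new` (selection is not modeled here)
    # 3. load the chosen process's CPU state from its PCB
    cpu.program_counter = new.program_counter
    cpu.registers = dict(new.registers)
    new.state = "running"
    # 4. the CPU now continues from where `new` left off
```

Nothing in `context_switch` advances either process, which is why the switch counts as overhead.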

next section is Threads, Benefits of Multithreading, Multithreading Models

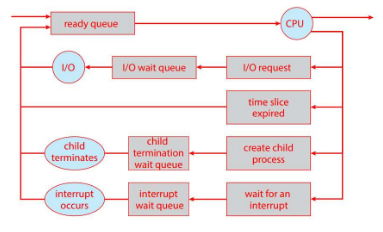

Process Scheduling

- the primary goal of a process scheduler is to select an available process for execution on the CPU.

- the OS uses various queues to manage processes:

- job queue: holds all processes in the system

- ready queue: holds all processes that are in main memory and are waiting for the CPU

- device queues: hold processes that are waiting for an I/O device

Schedulers

- Long-term Scheduler (Job Scheduler): selects processes from storage and loads them into the ready queue.

- controls the degree of multiprogramming (number of processes in memory)

- invoked infrequently and is responsible for selecting a good mix of I/O-bound and CPU-bound processes

- Short-term Scheduler (CPU Scheduler): selects from the processes in the ready queue and allocates the CPU to one of them

- invoked very frequently and must be fast

- Medium-term Scheduler: some OSes also use a medium-term scheduler to remove processes from memory (swapping) and reintroduce them later
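A small sketch of how the ready queue and a device queue interact with the schedulers. The FIFO order is an assumption (these notes do not name a scheduling policy), and the `Scheduler` class and its method names are hypothetical.

```python
from collections import deque


class Scheduler:
    def __init__(self):
        self.ready = deque()    # processes in memory, waiting for the CPU
        self.device = deque()   # processes waiting for an I/O device

    def admit(self, pid):
        """Long-term scheduler step: load a process into the ready queue."""
        self.ready.append(pid)

    def dispatch(self):
        """Short-term scheduler step: pick the next process for the CPU."""
        return self.ready.popleft() if self.ready else None

    def request_io(self, pid):
        """The running process blocks on I/O and joins the device queue."""
        self.device.append(pid)

    def io_complete(self):
        """The I/O finishes: the process goes back to the ready queue."""
        if self.device:
            self.ready.append(self.device.popleft())
```

`dispatch` is the call that runs very frequently, so it is kept to a single queue operation.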

Context Switch

- the process of saving the state of the current running process and restoring the state of the next process to be run

- The Process: when a context switch occurs, the OS saves the PCB of the old process and loads the PCB of the new one.

- includes saving and restoring the program counter, CPU registers, and other process state information

- Overhead: a context switch is pure overhead, since the system does no useful work while switching.

- the speed of a context switch depends on hardware support, such as the number of CPU registers

Operations on Process

Process Creation

- Parent-Child Relationship: a process can create a child process using a system call such as fork()

- the creating process is the parent process

- the new process is the child process

- Resource Sharing: the parent and child processes can share resources in several ways

- the parent and child share all resources of the parent

- the child process shares a subset of the parent's resources

- the parent and child share no resources at all

- Execution Options: the parent and child processes can execute in 1 of 2 ways:

- Concurrent Execution: the parent continues to execute concurrently with its children

- Parent Waits: the parent waits until one or all of its children have terminated before continuing

- Address Space: the child process's address space can be a duplicate of the parent's or a new program can be loaded into the child's address space

- the fork() and exec() system calls in UNIX-like systems are a prime example of this model
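The fork-then-exec pattern can be sketched with Python's `os` module, which wraps the same UNIX system calls. This assumes a UNIX-like system (`os.fork` is not available on Windows); `run_child` is a hypothetical helper name.

```python
import os
import sys


def run_child(argv):
    """fork() a child, exec() a new program in it, and wait for its exit status."""
    pid = os.fork()
    if pid == 0:
        # Child: starts as a duplicate of the parent, then exec() loads
        # a new program into its address space.
        try:
            os.execvp(argv[0], argv)
        finally:
            os._exit(127)   # only reached if exec() failed
    # Parent: waits until the child has terminated.
    _, status = os.waitpid(pid, 0)
    return os.WEXITSTATUS(status) if os.WIFEXITED(status) else -1


if __name__ == "__main__":
    code = run_child([sys.executable, "-c", "import sys; sys.exit(7)"])
    print("child exit status:", code)
```

Here the parent takes the "parent waits" option. Leaving out the `waitpid` call would let parent and child run concurrently instead.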

Process Termination

- Normal Termination: a process terminates when it finishes executing its last statement and uses the exit() system call to request termination

- returns a status value from the process to its parent

- Parent-Initiated Termination: a parent process can terminate one of its children for several reasons:

- the child has exceeded its resource limits

- the task assigned to the child is no longer needed

- the parent is terminating, and the OS does not allow a child to continue if its parent terminates

- Cascading Termination: when a parent process terminates, some OSes automatically terminate all of its child processes as well

- Zombie and Orphan Processes:

- zombie process: a child that has terminated but whose parent has not yet called wait() to collect its exit status

- orphan process: a child whose parent has terminated without calling wait()

- orphans are adopted by the init process, which collects their status and prevents them from becoming zombies

- GPT: the init process is the very first process started by the operating system kernel after the system boots and plays a crucial role in managing other processes

Interprocess Communication

Cooperating vs Independent Processes

- independent processes don't share data with other processes, while cooperating processes do

- Interprocess Communication (IPC): provides a mechanism for cooperating processes to exchange data

Benefits of IPC

- information sharing: multiple apps can work on the same data

- computation speedup: a task can be broken into subtasks and run in parallel on multiple cores

- modularity: a system can be designed with different functions running in separate processes

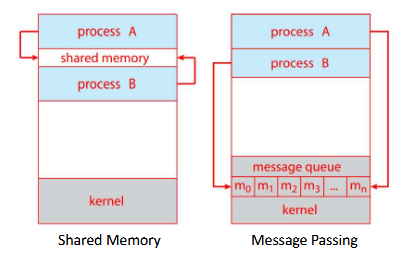

Models of Communication in IPC

- Shared Memory

- processes establish a shared memory region

- the OS is only involved in setting up this region; once established, communication is extremely fast as it's done directly in memory without kernel intervention

- Message Passing

- processes communicate by exchanging messages

- useful for exchanging smaller amounts of data and is the primary model for communication in distributed systems

- the OS provides system calls like send() and receive()
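A minimal sketch of the shared-memory model, assuming a UNIX-like system. An anonymous `mmap` region created before `fork()` stays shared between parent and child, so the child's write is an ordinary memory write that the parent can read back. `shared_memory_demo` is a made-up name.

```python
import mmap
import os
import struct


def shared_memory_demo(value):
    # Anonymous mapping: on Unix, mmap defaults to MAP_SHARED, so this region
    # is shared (not copied) between parent and child after fork().
    region = mmap.mmap(-1, 8)
    pid = os.fork()
    if pid == 0:
        # Child: write straight into the shared region.
        try:
            region[:8] = struct.pack("q", value)
        finally:
            os._exit(0)
    os.waitpid(pid, 0)   # crude synchronization: wait for the writer to finish
    (result,) = struct.unpack("q", region[:8])
    region.close()
    return result
```

The OS is involved only in creating the mapping. The `waitpid` call is there because shared memory itself provides no synchronization between reader and writer.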

Communication in Client-Server Systems

- Sockets

- a standard way to implement IPC over a network

- a socket is identified by a combination of an IP address and a port number

- sockets can be either connection-oriented (TCP) or connectionless (UDP)

- Remote Procedure Calls (RPC)

- a mechanism that allows a process to call a procedure (or function) on a remote machine as if it were a local function call

- the RPC system handles all the details of network communication, packaging the call and its parameters into a message and sending it to the server

- the client is typically blocked until the result is returned
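A small connection-oriented (TCP) socket sketch. To keep it self-contained, the "server" here is just a thread on the loopback address rather than a separate machine, and the uppercase reply is an arbitrary choice. `serve_once` and `request` are hypothetical names.

```python
import socket
import threading


def serve_once(server_sock):
    """Accept one connection, reply with the uppercased request, then stop."""
    conn, _addr = server_sock.accept()
    with conn:
        conn.sendall(conn.recv(1024).upper())


def request(message):
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        # Port 0 asks the OS for any free port; the socket is then identified
        # by the (IP address, port) pair that getsockname() returns.
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        host, port = server.getsockname()
        t = threading.Thread(target=serve_once, args=(server,))
        t.start()
        with socket.create_connection((host, port)) as client:
            client.sendall(message)
            reply = client.recv(1024)   # the client blocks until the reply arrives
        t.join()
    return reply
```

The blocking `recv` on the client side behaves like the RPC case described above: the caller is suspended until the result comes back.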

Examples of IPC systems

Interprocess Communication Systems

- Pipes: Pipes are a simple form of IPC that allows processes to communicate

- Ordinary Pipes: These are one-way (unidirectional) and can only be used between a parent and a child process

- Named Pipes: can be bidirectional and can be used between unrelated processes on the same machine

- POSIX Shared Memory

- A standardized set of APIs for creating and managing shared memory segments

- The process that creates the segment and the processes that attach to it can use standard memory operations to read and write to the shared data

- Mach

- An early operating system that was designed around the concept of message passing

- Mach's microkernel architecture relies on messages for all inter-process communication, even for communication between the kernel and its services

- Windows IPC: Windows offers a rich set of IPC mechanisms, including message passing (for GUIs), shared memory, and named pipes
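An ordinary pipe between a parent and its child, sketched with `os.pipe` and `os.fork` (UNIX-like systems only). Data flows one way, from the child's write end to the parent's read end. `read_from_child` is a hypothetical name.

```python
import os


def read_from_child(message):
    read_fd, write_fd = os.pipe()   # one-way channel: write end -> read end
    pid = os.fork()
    if pid == 0:
        # Child: only writes, so it closes the unused read end.
        try:
            os.close(read_fd)
            os.write(write_fd, message)
        finally:
            os._exit(0)
    # Parent: only reads; closing its copy of the write end is what lets
    # read() report end-of-file once the child is done.
    os.close(write_fd)
    chunks = []
    while True:
        chunk = os.read(read_fd, 4096)
        if not chunk:   # EOF: every write end has been closed
            break
        chunks.append(chunk)
    os.close(read_fd)
    os.waitpid(pid, 0)
    return b"".join(chunks)
```

Because the pipe's file descriptors are inherited through `fork()`, this only works between related processes. Unrelated processes would need a named pipe instead.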

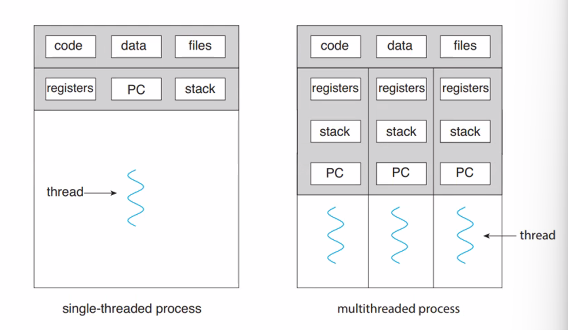

Threads

What is a thread

- a thread, or lightweight process, is a basic unit of CPU utilization

- it is a single sequential flow of control within a process

- threads within the same process share resources

Thread Components

- consists of a thread ID, a program counter, a register set, and a stack

- does not have its own text, data, or heap section

Multithreading

- a multithreaded process can have multiple threads of control

- ex. a word processor might have one thread to display graphics, another to read input, and a third to perform spelling and grammar checks in the background

Thread vs Process

- a process is a heavyweight entity that includes a program's code, data, and resources

- a thread is a lightweight flow of execution

- threads within the same process share the process's code, data, and resources
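A short sketch of what threads share and what they keep private, using Python's `threading` module. The list `shared_log` is visible to every thread, while `local_total` lives on each thread's own stack. All names are made up for illustration.

```python
import threading


def run(ns):
    shared_log = []           # one object in the process, seen by all threads
    lock = threading.Lock()   # protects shared_log from concurrent updates

    def worker(n):
        local_total = sum(range(n))   # local variable: private to this thread
        with lock:
            shared_log.append((threading.current_thread().name, local_total))

    threads = [threading.Thread(target=worker, args=(n,), name=f"t{n}") for n in ns]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(shared_log)
```

No copying or message passing is needed for the workers to report results, because they all write into the same list.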

Why use threads

- threads are used to enable an application to perform multiple tasks concurrently, improving responsiveness, resource sharing, and efficiency on multicore systems

go back to this part of the recording up until multicore programming start

it's easier to set up a thread than a process

Scalability

- threads enable parallelism: different threads can be assigned to different cores and run at the same time

parallelism and concurrency are not the same, though parallelism is a subset of concurrency.

Benefits of Multithreading

- responsiveness: a multithreaded application can remain responsive even if part of it is blocked or performing a lengthy operation

- resource sharing: threads share the same memory and resources of their parent process, making it more efficient than creating new processes

- economy: creating and switching BETWEEN THREADS is faster and less resource-intensive than creating and switching BETWEEN PROCESSES

- scalability: threads can run in parallel on multicore processors, taking full advantage of the hardware

segue to multicore programming

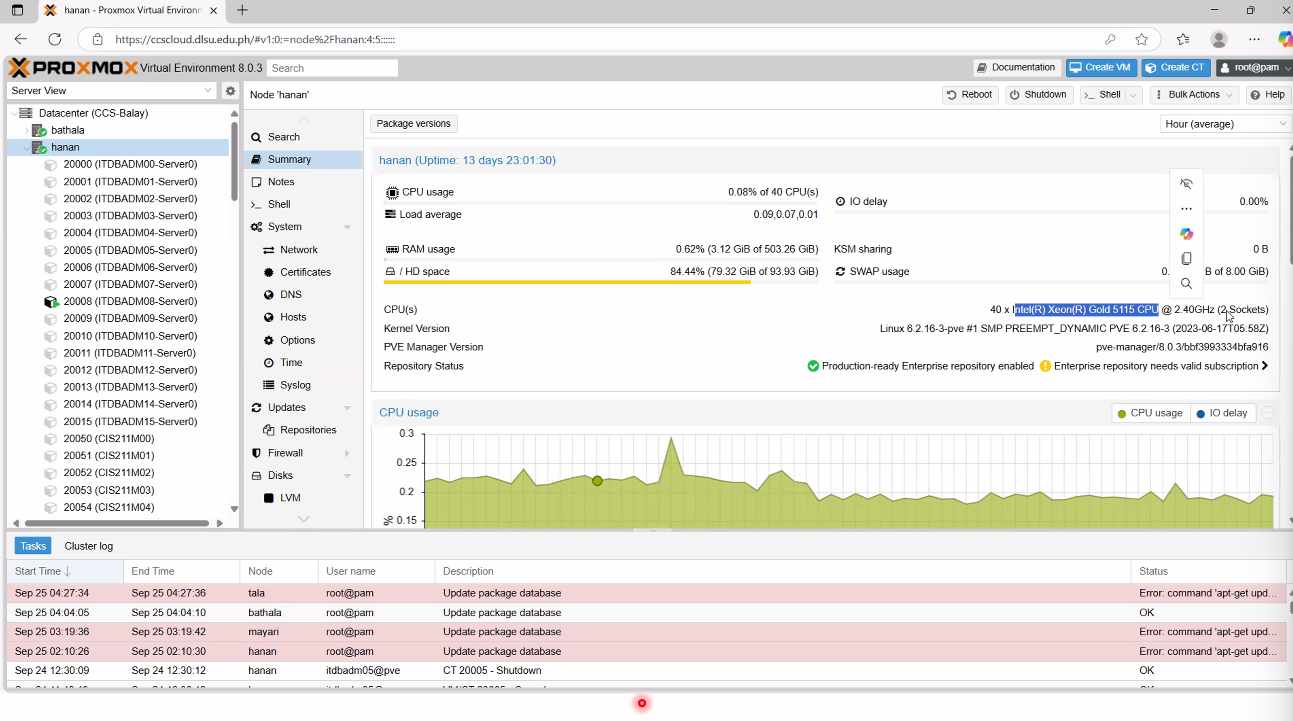

Multicore Programming

- ex. 40 CPUs: Intel Xeon Gold 5115 processors in 2 sockets, 10 cores per socket, 2 hardware threads per core (2 x 10 x 2 = 40 logical CPUs)

Multicore system vs Multiprocessor

- a multicore system has multiple processing cores on a single physical chip

- a multiprocessor system has multiple physical chips, each with one or more cores

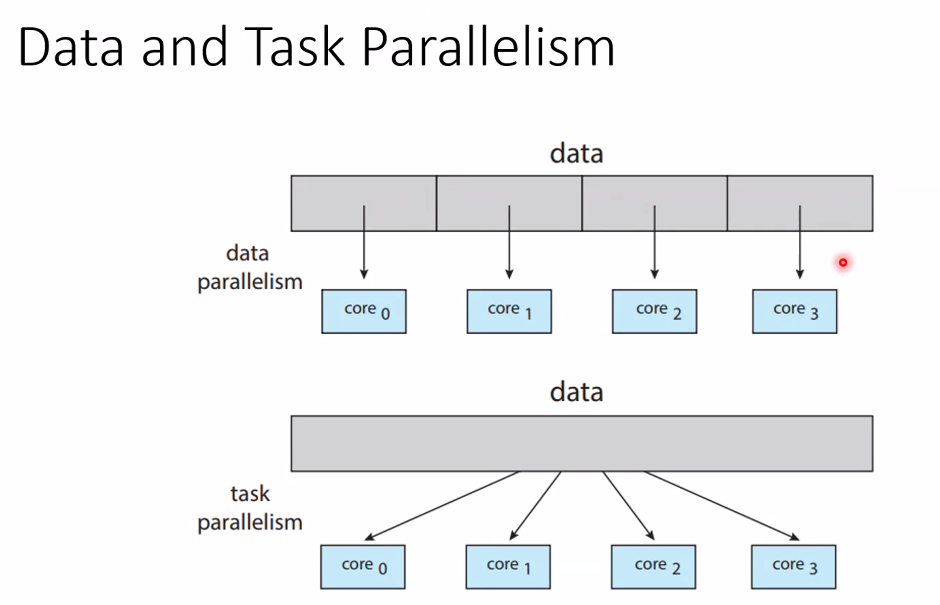

- Parallelism: implies a system can perform more than one task simultaneously

- Data Parallelism: distributes subsets of the same data across multiple cores, each performing the same operation

- each core uses the same instruction

- BUT each core operates on a subset of data

- this is called Domain Decomposition

- useful in ML training

- Task Parallelism: distributes different tasks to different cores

- each core may operate on the same data or on different data

- BUT each core uses a different set of instructions or a different program

- useful in operations where data has to be processed in stages --> pipelining

- this is called Functional Decomposition
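The two kinds of parallelism can be contrasted in a short sketch. It uses Python threads only to show how the work is split; in standard CPython builds with the GIL, CPU-bound code would need processes to actually run on several cores. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def data_parallel_sum(data, workers=2):
    # Data parallelism: the same operation (sum) on different subsets of the data.
    chunks = [data[i::workers] for i in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum, chunks))


def task_parallel_stats(data):
    # Task parallelism: different operations (min, max, sum) on the same data.
    tasks = (("min", min), ("max", max), ("sum", sum))
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = {name: pool.submit(fn, data) for name, fn in tasks}
        return {name: future.result() for name, future in futures.items()}
```

In the first function the partial results still have to be combined at the end, which is a sequential step.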


Challenges of Multicore Programming

- dividing activities: how do you break a task into multiple, concurrent subtasks?

- balancing: how do you ensure that the subtasks perform an equal amount of work to maximize efficiency?

- data splitting: how do you split the data accessed by the subtasks?

- synchronization: how do you coordinate threads so they don't interfere with each other?
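The synchronization challenge can be sketched with a shared counter. Several threads update the same variable, and a lock makes each read-modify-write step exclusive. `count_with_lock` and its parameters are made-up names.

```python
import threading


def count_with_lock(n_threads=4, increments=10_000):
    counter = 0
    lock = threading.Lock()

    def work():
        nonlocal counter
        for _ in range(increments):
            with lock:   # only one thread at a time may update the counter
                counter += 1

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter
```

With the lock in place the result is always `n_threads * increments`. Without it, concurrent updates can interleave and increments may be lost.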

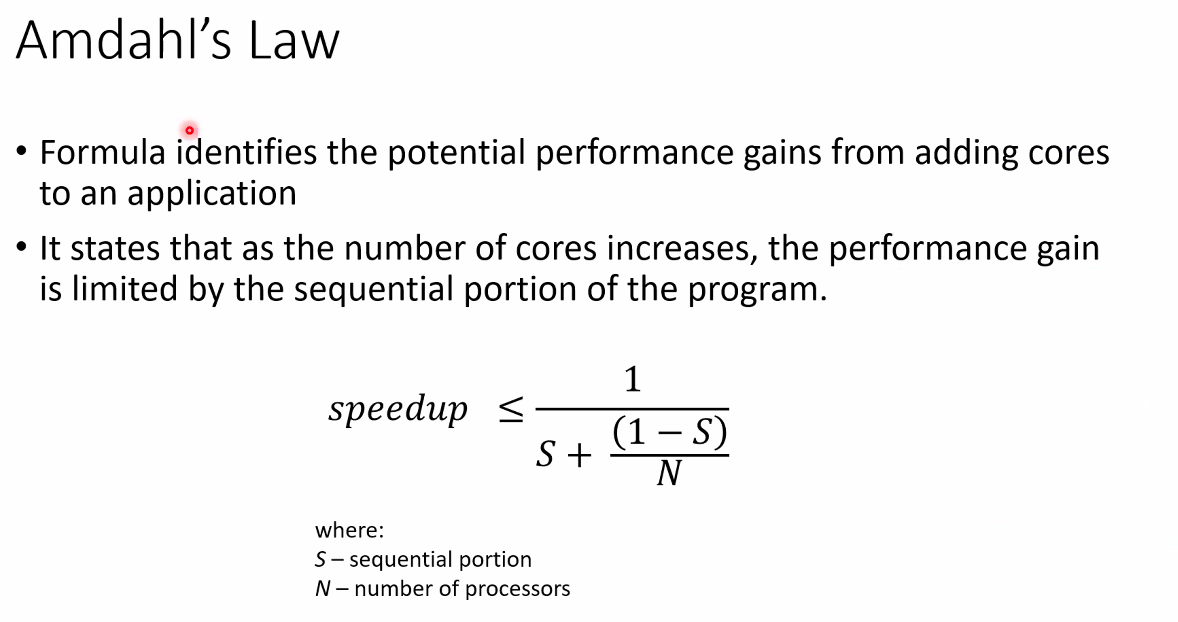

Amdahl's Law

- formula identifies the potential performance gains from adding cores to an application

- states that: as the number of the cores increases, the performance gain is limited by the sequential portion of the program

- the speedup can be calculated from the sequential portion S and the number of processing cores N: speedup <= 1 / (S + (1 - S) / N)

ex.

100% program

30% remains sequential

70% is now in parallel

N = 2 processors

speedup = 1 / (0.3 + (1-0.3)/2)

speedup = 1 / (0.3 + (0.7)/2)

speedup = 1 / 0.65

speedup ≈ 1.54 (potential speedup)
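The same calculation as a small function, so other values of the sequential portion and core count can be tried. `amdahl_speedup` is a made-up name.

```python
def amdahl_speedup(serial_fraction, cores):
    """Upper bound on speedup for a given sequential portion and core count."""
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / cores)


if __name__ == "__main__":
    for n in (2, 4, 8, 16):
        print(n, round(amdahl_speedup(0.3, n), 2))
```

With 30% of the program sequential, the result can never exceed 1 / 0.3 (about 3.33) no matter how many cores are added.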

Multithreading Models

Types of threads:

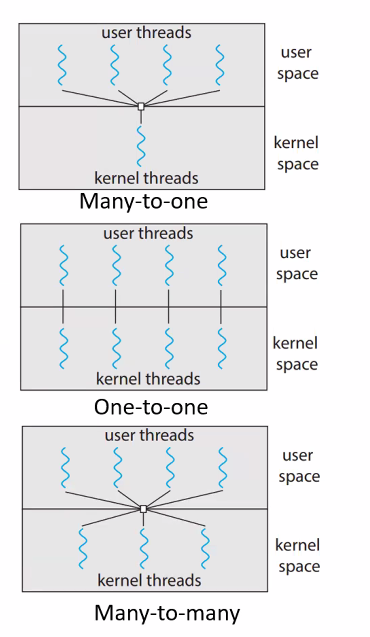


- User-Level threads: threads are managed by a user-level library without kernel support

- Kernel-Level Threads: threads are managed by the operating system kernel

Mapping Models

- Many-to-One Model: maps many user-level threads to a single kernel thread

- many-to-one isn't used that much anymore

- efficient but if one thread makes a blocking system call, the entire process is blocked

- One-to-One Model: maps each user-level thread to a kernel thread

- Windows uses one-to-one because it's easier to implement

- allows threads to run in parallel on multicore systems, and a blocking system call in one thread does not block the entire process

- GPT: Concurrency is the ability of a system to handle multiple tasks at the same time in an overlapping manner.

- Many-to-Many Model: a flexible model where many user-level threads are mapped to a smaller or equal number of kernel threads

- the OS can create as many kernel threads as needed, providing a good balance of efficiency and concurrency

- many-to-many is more flexible

Thread Libraries

- a thread library provides the programmer with an API for creating, managing and synchronizing threads

Primary ways of implementing a library

- User-Level Library: provides no kernel support, and all thread management happens at the user level

- Kernel-Level Library: provides a direct API for the OS's kernel threads

Common Thread Libraries

- POSIX Pthreads: widely used, platform-independent API for thread creation and synchronization

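- Pthreads is a specification for thread behavior, not an implementation, so it may be provided as either a user-level or a kernel-level library; implementations exist on many operating systems

- you still have to recompile the code if you want it to work on another platform (binary file formats are not the same across Windows/Linux/Mac, etc.)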

- Win32 Threads: a kernel-level library provided by the Windows OS

- Java Threads: managed by the Java Virtual Machine (JVM)

- the JVM typically uses the underlying OS's threading model

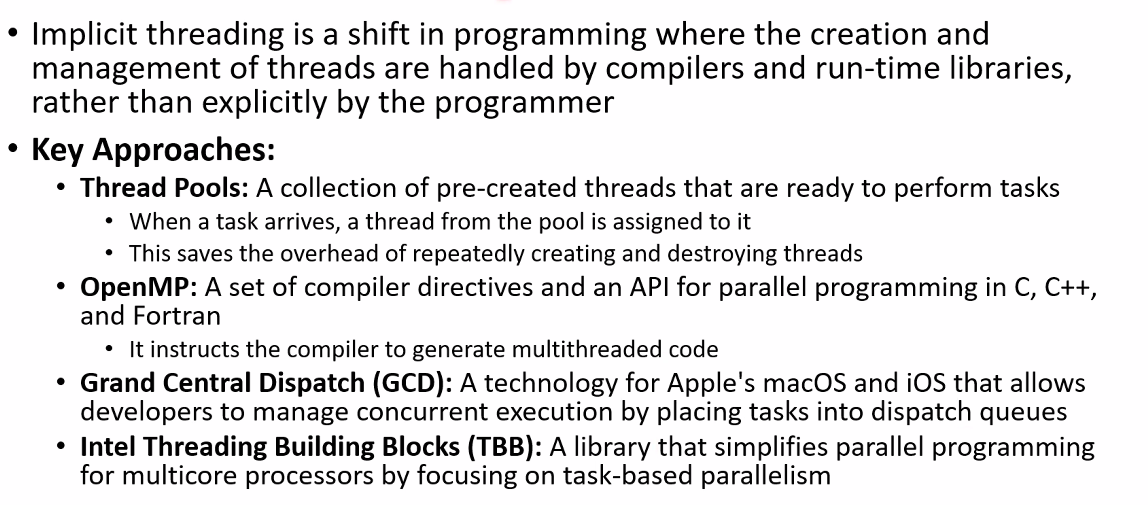

Implicit Threading

- a shift in programming where the creation and management of threads are handled by compilers and run-time libraries, rather than explicitly by the programmer

Key Approaches:

- Thread Pools:

go back to this part for extra notes
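As a placeholder until the extra notes are filled in, here is a minimal thread pool sketch using Python's `concurrent.futures`. The library creates and reuses the worker threads, and the program only submits tasks. `square_all` is a hypothetical name.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def square_all(numbers, max_workers=2):
    seen_threads = set()
    lock = threading.Lock()

    def task(n):
        with lock:   # record which pool thread ran this task
            seen_threads.add(threading.current_thread().name)
        return n * n

    # The pool owns thread creation, reuse, and shutdown.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(task, numbers))
    return results, seen_threads
```

Ten tasks submitted to a pool of two workers are still served by at most two threads, which is the point of the pool: thread creation cost is paid once, and the number of live threads is bounded.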

Issues in Threading

- the fork() and exec() System Calls: the behavior of fork() can be problematic in a multithreaded program

- does fork() duplicate all threads or only the one that called fork()?

- some UNIX systems provide two versions of fork(): one that duplicates all threads and one that duplicates only the calling thread

- Signal Handling

- a signal notifies a process that an event has occurred

- in a multithreaded program, it's not always clear which thread the signal should be delivered to

- that's why you use polling if you want a thread to check whether a key has been pressed

- Thread Cancellation

- termination of a thread before it has completed

- done in 2 ways:

- Asynchronous Cancellation: terminates the target thread immediately

- Deferred Cancellation: allows the target thread to periodically check if it should terminate, providing a chance to clean up resources before exiting

- Thread-Local Storage (TLS): unfinished
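A sketch of deferred cancellation from the Thread Cancellation item above, using a `threading.Event` as the cancellation flag. Python's `threading` module has no call that kills a thread immediately, so only the deferred style is shown. The function names and the sleep durations are arbitrary choices.

```python
import threading
import time


def cancellable_worker(cancel, log):
    try:
        while not cancel.is_set():   # cancellation point, checked every loop
            time.sleep(0.01)         # stand-in for one unit of real work
    finally:
        log.append("cleaned up")     # release resources before exiting


def run_and_cancel():
    cancel, log = threading.Event(), []
    t = threading.Thread(target=cancellable_worker, args=(cancel, log))
    t.start()
    time.sleep(0.05)
    cancel.set()                     # request cancellation; the thread decides when
    t.join(timeout=2)
    log.append("alive" if t.is_alive() else "stopped")
    return log
```

The target thread exits at a point of its own choosing, so the cleanup in `finally` always runs before it stops.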

Operating System Examples

Code Demo - Threading

nothing here