STCLOUD_virtualization fundamentals and concepts, types of virtualization

Virtualization Fundamentals and Concepts

Traditional vs. Virtualization Recap

- Traditional

- resources have to be dedicated

- one or few services/applications are installed

- single OS

- compatibility issues: if one system runs Win10 and another runs Linux, they will have different setups (e.g. different firewalls)

- you will literally need separate physical computers

- physical setup/hardware

- we can't fully utilize the resources in our machine

- expansion → we want to keep extra capacity in reserve, just in case

- sudden spikes in resource utilization → e.g. Shopee sees spikes during Mother's Day, Christmas, etc. A single machine can't handle the peak load, yet most of the time the actual usage is only around 10%

- decrease in utilization during downtimes

- Virtualization

- Instead of having to buy many small machines, you can just buy one single powerful machine with high amounts of resources

- example: compare 16GB, 32GB, and 64GB flash drives. if the 16GB costs 1000php, the 32GB will not be 2000php but around 1500php, and the 64GB around 2200php. A larger amount of resource costs less per unit than a smaller amount → SO IT COMES OUT CHEAPER than having many physical machines

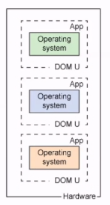

- Host machine (where the hypervisor is) and 1+ guest machines

- the physical machine is the host

- the virtual machines are the guest instances

- flexibility, scalability

- "pooled" resources among the guests

- Cloud
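A quick sketch of the flash-drive arithmetic from the recap above. It assumes the 16GB price is 1000php; all three prices are only the lecture's illustration, not real quotes. The point it shows is that the price per GB drops as capacity grows, which is the same reasoning behind buying one large host instead of many small machines.

```python
# Prices from the flash-drive example in the notes (PHP); illustrative only.
prices_php = {16: 1000, 32: 1500, 64: 2200}


def cost_per_gb(prices):
    """Return {capacity_gb: price per GB} for each option."""
    return {gb: price / gb for gb, price in prices.items()}


per_gb = cost_per_gb(prices_php)
for gb, unit in sorted(per_gb.items()):
    print(f"{gb} GB: {unit:.2f} PHP per GB")

# Same total capacity bought two ways: four small drives vs one large drive.
four_small = 4 * prices_php[16]
one_large = prices_php[64]
print(f"4 x 16 GB = {four_small} PHP, 1 x 64 GB = {one_large} PHP")
```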

Properties of Virtualization

the advantages usually outweigh the disadvantages.

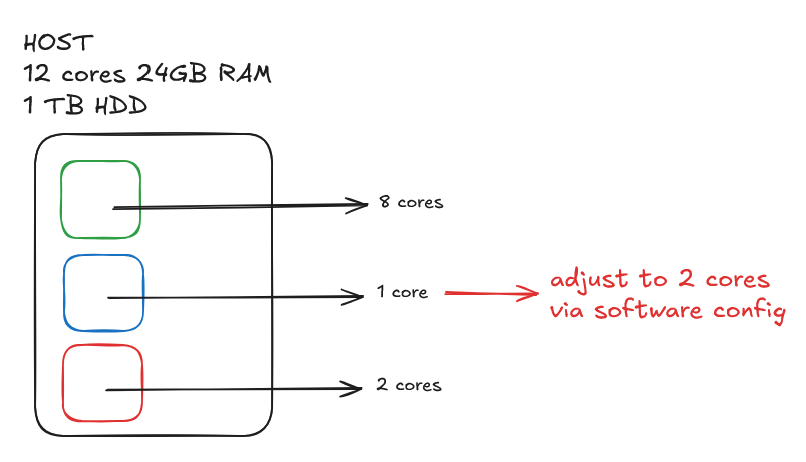

- virtual machines are software → you can just adjust resources on the fly.

- containers don't have to be turned off because they run alongside the host OS

1. Partitioning (resources)

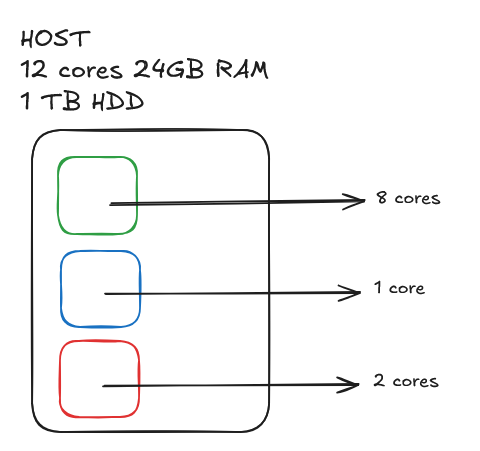

you can create multiple machine instances within the host machine, and you have the flexibility to decide how to divide your resources among them

- divide resources between virtual machines

- run multiple OS or virtual machines in a single physical machine

- for improving compatibility (if you're on Windows you can run Linux and Mac applications using virtualization)

- you can ADJUST certain resources from the original host based on what you need

- in the real world, resource utilization is often unpredictable. a task may require more or less than expected, and in industry a machine sometimes can't keep up with the requirements.

- monitoring tools → to look at resource utilization, gives you an idea which one to adjust

- baselining → having an idea of what your normal usage is, lets you know if you have to scale up or scale down
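A minimal sketch of partitioning, assuming a made-up `Host` model (the class, its fields, and the guest names are hypothetical, not any hypervisor's API). It divides a fixed pool of cores and RAM between guests and lets a guest be resized as long as the pool is not exceeded. Overcommitment is not modeled here.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A physical host with a fixed pool of CPU cores and RAM (hypothetical model)."""
    cores: int
    ram_gb: int
    guests: dict = field(default_factory=dict)  # name -> (cores, ram_gb)

    def free(self):
        """Return (free cores, free RAM in GB) left in the pool."""
        used_cores = sum(c for c, _ in self.guests.values())
        used_ram = sum(r for _, r in self.guests.values())
        return self.cores - used_cores, self.ram_gb - used_ram

    def allocate(self, name, cores, ram_gb):
        """Create or resize a guest; refuse if the pool would be exceeded."""
        previous = self.guests.pop(name, None)
        free_cores, free_ram = self.free()
        if cores > free_cores or ram_gb > free_ram:
            if previous is not None:
                self.guests[name] = previous  # restore the old allocation
            return False
        self.guests[name] = (cores, ram_gb)
        return True


host = Host(cores=16, ram_gb=64)
host.allocate("web", 4, 8)
host.allocate("db", 8, 32)
host.allocate("web", 6, 16)   # resize "web" upward without touching "db"
print(host.free())
```

A request that does not fit returns `False` and leaves the existing allocations as they were.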

side note on Cloud Computing

- Automatic scaling → the autoscale capability runs the monitoring tools automatically, and you can create rules that scale your resources up or down
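Baselining plus a scaling rule can be sketched roughly like this. The 80% and 20% thresholds and the sample numbers are assumptions made up for illustration; they are not taken from any cloud platform.

```python
from statistics import mean


def baseline(samples):
    """Average utilization (0-100 %) over a monitoring window."""
    return mean(samples)


def scaling_decision(samples, high=80.0, low=20.0):
    """Return 'scale up', 'scale down', or 'hold' from recent utilization.

    The thresholds are assumed values, not taken from any specific platform.
    """
    avg = baseline(samples)
    if avg > high:
        return "scale up"
    if avg < low:
        return "scale down"
    return "hold"


normal_day = [10, 12, 9, 11, 10]   # mostly idle, around 10 % usage
sale_day = [85, 92, 97, 88, 90]    # a holiday spike

print(scaling_decision(normal_day))
print(scaling_decision(sale_day))
```

A real rule would usually also look at how long the threshold has been crossed, so that a single noisy sample does not trigger scaling.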

side note on Traditional

- Cold boot → turning the computer off and then on again, after which all the applications and services have to start up

- sometimes cold boot is not an option if you're running, for example, a banking application

these are considerations when choosing between traditional and virtualized setups. they tell you whether you need to scale up or down to maximize your resources (which also affects costs)

2. Isolation (risk and conflict dependencies)

you isolate the particular machine from another → isolation of conflict, reduction of risk

- fault isolation at the hardware level

- if one VM has issues like crashing or malware, it's not going to affect the other VMs. great for updates and security.

- isolated updates → patching one will not patch all of them

- reduce risk of dependencies and conflicts

3. Encapsulation (treating VMs as data)

analogy: packets are data sent over a network, wrapped in extra data that helps them reach the destination network. virtual machines are encapsulated in a similar way because they are just 1s and 0s (configurations and data) → VMs are treated as software

- move and copy virtual machines as files, create snapshots, modify versions, etc.

- improved portability

- if you want to copy an entire physical computer, you have to replicate the drive and also the hardware resources, which can be costly

- sandboxes → when you build a product or application, you have a production environment and a development environment. the two should be the same, so sandbox templates are used to replicate both setups.
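A small sketch of "treating VMs as data", under heavy simplification: here a VM is only a JSON config file, while a real VM also carries disk images and other state. The field names and file names are made up for illustration. It shows a snapshot (copy the file as it is now) and a clone from that snapshot (a matching environment built from a template).

```python
import json
import shutil
import tempfile
from pathlib import Path


def save_vm(path, config):
    """Write a VM definition to disk as a plain JSON file."""
    Path(path).write_text(json.dumps(config, indent=2))


def load_vm(path):
    """Read a VM definition back from disk."""
    return json.loads(Path(path).read_text())


workdir = Path(tempfile.mkdtemp())

# Hypothetical VM definition; the field names are made up for illustration.
dev_vm = {"name": "dev", "os": "linux", "cpus": 2, "ram_gb": 4}
save_vm(workdir / "dev.json", dev_vm)

# "Snapshot": keep a copy of the file as it is right now.
shutil.copy(workdir / "dev.json", workdir / "dev.snapshot.json")

# Modify the definition after the snapshot was taken.
dev_vm["ram_gb"] = 8
save_vm(workdir / "dev.json", dev_vm)

# "Clone": copy the snapshot to create a second environment from the template.
shutil.copy(workdir / "dev.snapshot.json", workdir / "prod.json")

print(load_vm(workdir / "dev.json")["ram_gb"])
print(load_vm(workdir / "prod.json")["ram_gb"])
```

The snapshot and the clone keep the old 4 GB setting even though the original was changed afterwards.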

4. Hardware Independence (it works on any physical machine)

- provision or migrate to any server without the need to perform reconfigurations on the virtual machine

- the virtual resources are independent of the hardware. if you have an AMD CPU and another person has an Intel CPU and different DDR4 RAM, the virtual machine will still run to some extent

- if the data of your virtual machine is on a shared network storage, you can transfer it to another server even though the hardware is different. it will still work.

- virtual resources are independent of the hardware

- identified as CPU, memory, disk space, network (general categories; they don't tell you that it has to be Intel or AMD, Wi-Fi, etc.)

- it doesn't care if it's threads or actual cores → better compatibility

Types of Virtualization (44:31)

back then it was just Server, Storage, and Network. the number of types varies depending on which source you read.

- Server/Compute <-

- Desktop

- Storage <-

- Data

- Network <-

- Applications

1. Server/Compute Virtualization

- one of the first types to be developed

- enables multiple OSes to run on a single physical server; virtualizing the computer itself

- partitions a physical server into multiple virtual servers via software

- benefits

- greater IT efficiencies

- instead of multiple physical machines, it's just a single machine

- resources are shared so you can maximize the resources

- reduced operating costs - you're just managing a single, physical machine

- reduced space consumption, heat regulation

- maximization of resource

- faster workload deployment → just have to click a bunch of buttons and it's already made

- agility → if you need resources, it can come quickly

- increased application performance → with physical servers, data has to travel across the physical network between machines. between virtual servers on the same host, transfers are much faster because the data stays within the same computer and moves through RAM

- higher server availability → because of encapsulation, servers are treated as software. to make sure your server stays up, you can run two copies of it at the same time

- user wants to access website → their request is sent to the load balancer → the LB is an appliance that forwards the traffic to the servers (all running the same application) → all the individual servers are connected to the same database

- database can have a read replica (if you're only reading data, read from there)

- eliminated server sprawl and complexity

- server sprawl:

- racking and stacking of servers

- these are a bunch of VMs (some VMs can have more cores or RAM compared to others)

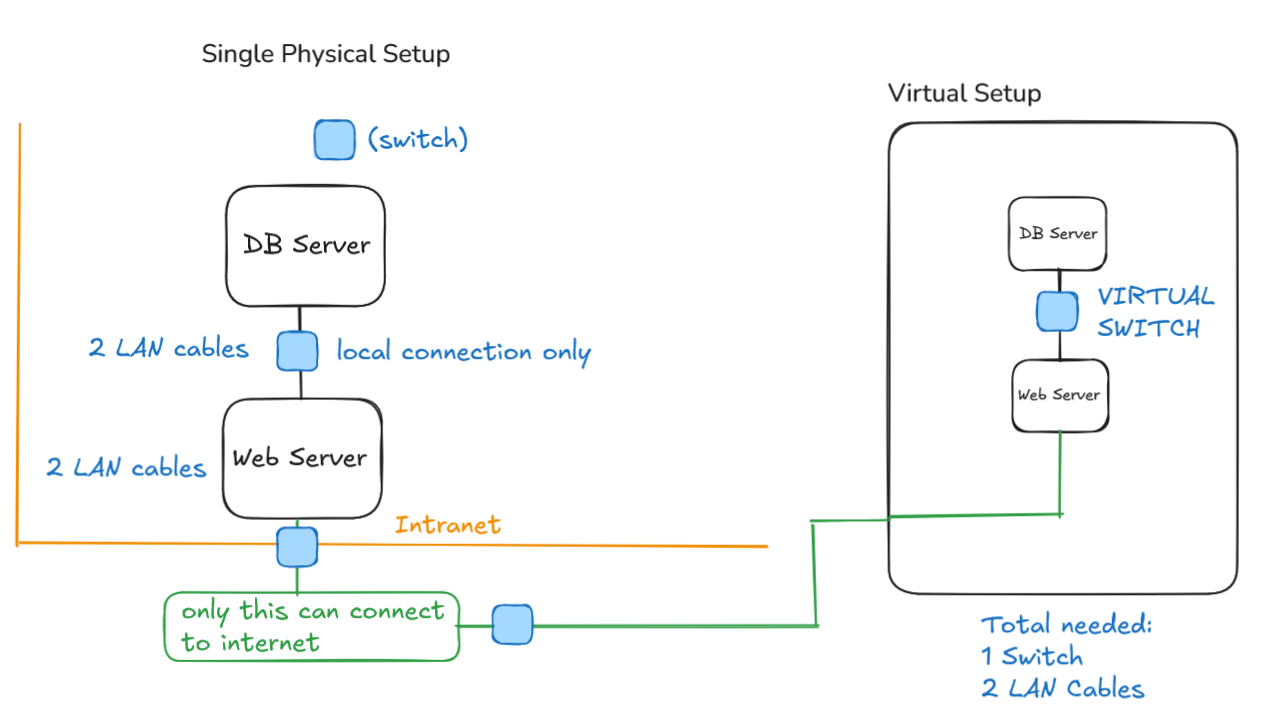
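The load balancer flow described under "higher server availability" can be sketched as a simple round-robin over identical servers. This is an assumed, simplified model: the `LoadBalancer` class and the server names are hypothetical, and real load balancers use health checks and other policies.

```python
from itertools import cycle


class LoadBalancer:
    """Round-robin balancer over identical servers (hypothetical sketch)."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._ring = cycle(self.servers)

    def mark_down(self, server):
        """Stop sending traffic to a server that has failed."""
        self.healthy.discard(server)

    def route(self):
        """Return the next healthy server, skipping any marked down."""
        for _ in range(len(self.servers)):
            server = next(self._ring)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


lb = LoadBalancer(["vm-a", "vm-b"])
print([lb.route() for _ in range(4)])

lb.mark_down("vm-a")   # one copy fails; requests keep going to the other
print([lb.route() for _ in range(3)])
```

Because both copies run the same application, losing one of them only reduces capacity; requests still get served.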

2. Network Virtualization

- physical servers are limited by their hardware (if your server has 8 network interface cards in a physical setup, you can only have 8 servers. in CCSCloud, there are 4 network interface cards, but the server can house a thousand VMs, as if there were a thousand network interface cards)

- virtualization lets us have many virtual networks

- completely reproduces a physical network, allowing applications to run on a virtual network as if they were running on a physical network

- achieves greater operational benefits and all the hardware independence of virtualization

- hardware independence: even if you have 4 network interfaces, you can have 100 VMs with 2 NICs each

- 1 NIC → 1 Gbps, but in the VM it's like 10 Gbps because it's all in the RAM and CPU of the physical server

- network virtualization presents logical networking devices and services to connected workloads (virtualizing the network portion) such as:

- logical ports → not limited to the physical ports (there will be a bottleneck if all your VMs have to connect to the internet, but local connections will not be an issue)

- switches → virtual networks

- routers

- firewalls

- load balancers

- VPNs and more

switches:

basically, you won't need the physical devices anymore; just virtualize them and add a virtual interface for each.
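A rough sketch of a virtual switch, assuming a simple MAC-learning design (the `VirtualSwitch` class is hypothetical and not any hypervisor's API). Ports are just Python lists in memory, so the number of virtual NICs is not tied to the number of physical NICs, and frames between VMs never leave the host.

```python
class VirtualSwitch:
    """A tiny in-memory learning switch (illustrative, not a real hypervisor API)."""

    def __init__(self):
        self.ports = {}       # port number -> list acting as that vNIC's inbox
        self.mac_table = {}   # learned MAC address -> port number

    def add_port(self):
        """Attach a new virtual NIC; ports are only limited by memory here."""
        port = len(self.ports)
        self.ports[port] = []
        return port

    def send(self, in_port, src_mac, dst_mac, payload):
        """Deliver a frame: unicast if the destination is known, else flood."""
        self.mac_table[src_mac] = in_port          # learn where the sender lives
        out_port = self.mac_table.get(dst_mac)
        if out_port is not None:
            targets = [out_port]
        else:
            targets = [p for p in self.ports if p != in_port]
        for port in targets:
            self.ports[port].append((src_mac, payload))


switch = VirtualSwitch()
vm_ports = [switch.add_port() for _ in range(100)]   # far more than 4 physical NICs

switch.send(0, "aa:00", "aa:01", "hello")   # aa:01 unknown, so the frame floods
switch.send(1, "aa:01", "aa:00", "reply")   # aa:00 was learned, so only port 0 gets it
print(len(switch.ports), switch.ports[0])
```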

3. Desktop Virtualization

BYOD → bring your own device (otherwise, companies will usually provide work laptops)

WFH → work from home

- deploying desktops as a managed service enables IT organizations to respond faster to changing workplace needs and emerging opportunities.

- you connect to the VPN of the company and then they give you a remote desktop

- the desktop in the cloud is usually set up with all of the applications that you need

- virtualized desktops and applications can also be quickly and easily delivered to branch offices, outsourced and offshore employees, and mobile workers using tablets

- typically categorized as Local Desktop Virtualization or Remote Desktop Virtualization

- virtualization makes desktop management efficient and secure (preconfigurations, proper management by the IT dept) and saves money on desktop hardware